This is my second post in the Did You Know series, tying into Pam Misialek's series. This feature allows users to create a one-off backup of a database from within Dynamics GP and save it either locally or on Azure storage. Originally introduced in GP 9, the Backup to Azure was added to this feature in GP 2013 R2.

I've never tried this feature before, as I did not need to in the past. One great thing about this DYK series is there will be things I'll be trying that I may not have had an opportunity to try yet!

While this obviously wouldn't be the normal way to create a backup, sometimes this could come in handy for specific purposes, like for consultants preparing for demos that want a quick backup of what they've prepared before they test a configuration, so they can re-use the data easily after restoring. I know I don't bother with setting up scheduled SQL jobs for backups on my environments unless I'm mimicking something for a client. So, for that type of situation, I can see this would be a great way to simplify creating a backup without bothering to open SQL Server Management Studio when I'm right in the middle of working on some project.

Summary

URL: https://community.dynamics.com/blogs/posts (did you know backups link)

Version: available since Dynamics GP 2013 R2 (with Azure option)

Interesting trivia! "Scheduled Backups" were introduced as a feature in GP 9, which included some actual "scheduling" features, as the name indicates. In GP 2010, the "scheduling" functionality was stripped, and it became just a "Back Up Company". The window roughly resembled the current window except no options for where to store, so your only choice was entering a pathname for the local storage.

Requirements: there are a few requirements to use this feature.

- This feature is only available when using the Dynamics GP client *on* the SQL server instance. Like I said above, great for consultants, but not necessarily that useful for other situations. (if you're already on the server, why not just use SSMS?)

- The user logging into Dynamics GP must be a system administrator on the SQL server. Hey, back to "great for consultants" again, who tend to use 'sa' all the time even when they don't need to!

- If using the Azure storage option, you must be using SQL Server 2012 SP1 Cumulative Update 2 or later. Say that 10 times fast!

- Make sure your internet speed and upload/download speed can handle sending and receiving files of the sizes of your backups to Azure. In my testing, a "basic" home internet wireless connection was completely inadequate, but a "high speed" wireless home internet connection was fine. That tells me most business networks would likely be fine, but in researching issues I ran into, I read some posts where people commented that network limitations were a deal-breaker for them. YMMV. My "home internet" connection speeds tonight were this:

How To

First hint: you won't find this under what I would consider the obvious places! I would have thought this would appear under Utilities > System on the Administration navigation pane, but it's considered "maintenance". So, this window is located under Microsoft Dynamics GP > Maintenance > Backup.

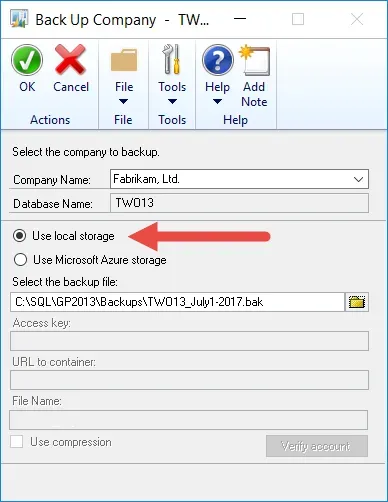

Option 1 is creating a local backup. In case you have not used this yourself, here's how the original functionality worked.

- Open the Back-Up Company window, select which company to back up, and choose Use local storage.

- Accept the default path & filename or browse and create a new one. I browsed to a shorter path for illustration purposes but left the .bak filename as auto-generated by Dynamics GP. I likely would overwrite this if doing it for real though, I don't like the default file naming convention they have here!

- Click on OK to create the backup and, assuming you have the necessary SQL permissions, your backup will be created.

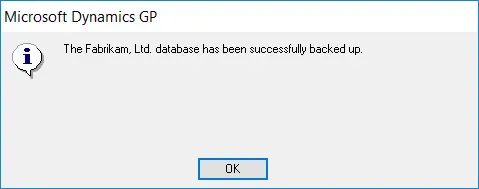

- After the backup is done, a message box will appear to tell you it was successful.

One thing I don't particularly like about the way this feature was designed, is that the window closes when you click OK to make the backup. That means, if you want to create multiple database backups, you must continually navigate and re-open the window and enter your settings again. I wrote up a Microsoft Connect suggestion (link removed, no longer valid) for some ideas on how this functionality could be made even better.

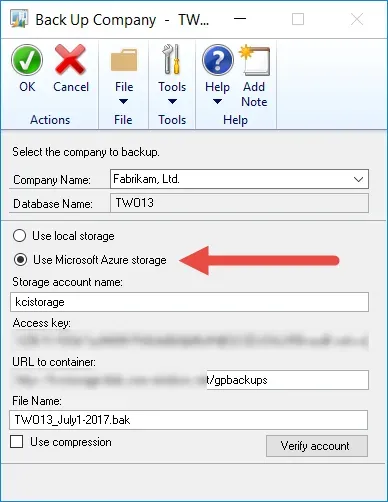

Option 2 is the new feature, added in GP 2013 R2, creating a backup to Azure Storage.

- It's fairly simple, or so I thought, assuming you already have set up an Azure Storage account and blob container.

- Here's a bit more info with links to further help, from the original "new features" blogs on the Microsoft support site.

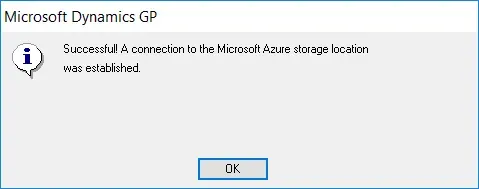

Test your connection by clicking Verify Account. You should receive this message if it works.

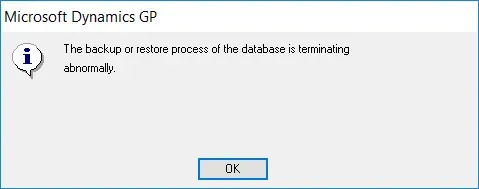

In my testing, the backup took WAY longer than the local backup did, measured in minutes as opposed to seconds with local storage, which makes sense of course, since it's not local. However, my attempt then failed after exactly 3:00 minutes with a message (below).

The internet connection where I started doing the testing for this post wasn't the greatest, it is pretty much your basic entry-level home internet WiFi. It turns out, that was the reason for the failure as trying the same thing again from home was successful just like the local backup option (albeit it took longer because it's backing up to an internet location).

Recap

So, I was ultimately successful with the Azure side of things from the GP interface, but I went through quite a bit of hassle before I realized my internet connection was to blame. I could see the .bak files in my Azure storage account, but they all said they are 1TB in size! I believe it defaults to an initial size of 1TB until the size is verified, but if it fails, the interface shows that as the final size.

I tested with both GP 2013 R2 and GP 2015 R2, just in case there was an issue with GP at a certain point. I then decided to test from SQL Server Management Studio, using the Backup to URL feature (same thing). I configured a Credential and attempted the backup and it also failed. I then decided to try backing up a small database, I chose the "model" db just because it's quite small, and it backed up successfully to Azure. That was great news, as that told me my configuration was correct, and narrowed down the possible issues list a little further. I even tried straight-up uploading a SQL .bak file to my storage container and it failed too so it became more obvious what the issue might have been.

While working on this, my Azure storage container showed locked leases on the uploads that failed, which I couldn't delete until I went through the steps in this article. The script looked intimidating but actually worked like a charm with 4 input parameters, and afterwards, I was able to delete the test backup files from my blob container storage.

Now that I have run through all of the above scenarios from my regular home-office network, everything works as expected and the file sizes in the Azure storage are more realistic, I can delete the backups through the Azure portal the normal way if I don't need them any longer.